17 Apr

You may have heard the term service mesh in recent conversations with co-workers or at a tech conference, or seen it in passing on the internet, and wondered what all the fuss is about. It’s certainly one of the buzzwords in today’s technology landscape. It’s hard to talk about modern application development without talking about microservices. As your use of microservices grows, you begin to notice certain functionality that needs to be developed in each service: security, retries, observability, service discovery, tracing, and so on. It is possible to encapsulate all of that in a common set of libraries shared by all services, but then a team within your organization has to own and maintain those libraries, adding to your existing technical debt. In addition, such libraries won’t be language agnostic, so you would need to develop and maintain a separate one for each language used in your organization. Wouldn’t it be nice to have such an abstraction provided without incurring additional technical debt? Service mesh to the rescue!

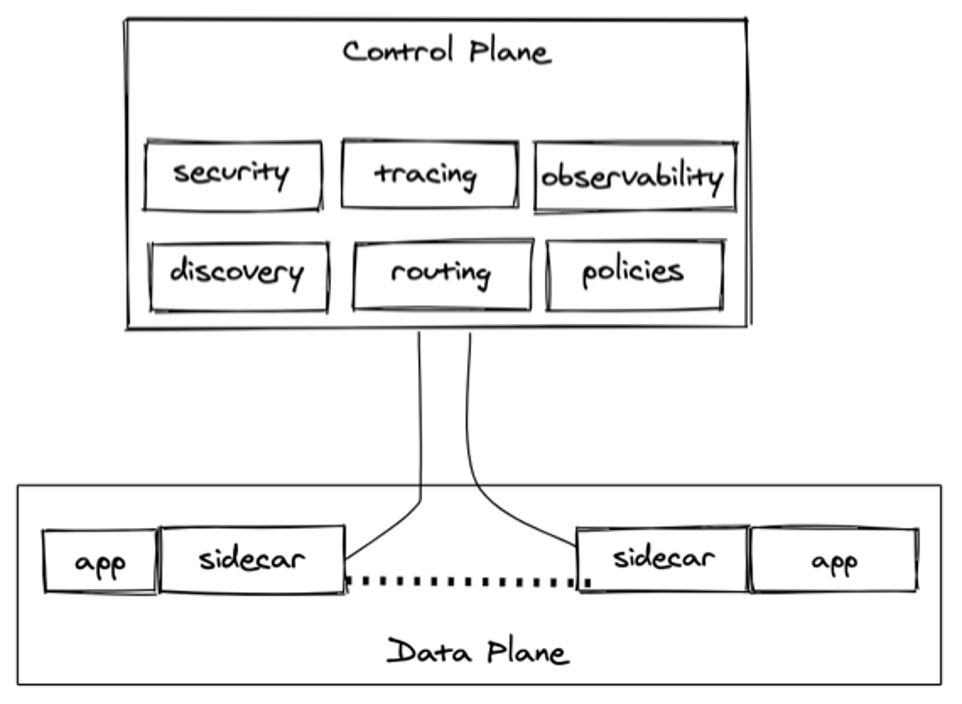

A service mesh simplifies the process of connecting, protecting, and monitoring microservices. It is an abstraction layer that takes care of service-to-service communication, security, tracing, observability, and resiliency in modern, cloud-native applications. By providing such an abstraction, it decouples service-to-service communication from the applications themselves, allowing dynamic and predictable configuration at runtime. The mesh is built with resiliency in mind: it assumes the underlying infrastructure and network fabric the services run on will fail, and when they do, it makes automatic retry attempts on your service’s behalf. In recent years, it has become a critical component of the cloud-native stack as more and more Fortune 500 companies have embraced it in production.

Service mesh functionality is delivered by two core components: the control plane and the data plane. The control plane encapsulates the cross-cutting concerns and functionality. It communicates with the data plane, which consists of your service and a lightweight network proxy often referred to as a sidecar. Each copy of the service is colocated with a sidecar proxy that handles all communication with the control plane and all traffic to and from the service. See the diagram below.

Service Mesh: High Level

This is a BIG win: because the sidecar handles these concerns outside the application, existing applications and services can benefit from the service mesh immediately, with no additional code written to take advantage of it.
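To make the control plane and data plane split more concrete, here is a minimal in-process sketch. It is only an illustration under stated assumptions: `ControlPlane`, `Sidecar`, `ProxyConfig` and `make_flaky_service` are hypothetical names, the "network" is a plain function call, and a real mesh runs the proxy as a separate process next to the service rather than as a Python wrapper.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProxyConfig:
    """Policy the control plane pushes to sidecars (hypothetical field)."""
    max_retries: int = 0


class Sidecar:
    """Data-plane proxy colocated with one copy of a service."""

    def __init__(self, service: Callable[[str], str]) -> None:
        self._service = service       # the unmodified application code
        self._config = ProxyConfig()  # replaced at runtime by the control plane
        self.attempts_seen = 0        # telemetry gathered by the proxy

    def apply(self, config: ProxyConfig) -> None:
        """Accept new policy without restarting the service."""
        self._config = config

    def handle(self, request: str) -> str:
        """All traffic to the service passes through the proxy."""
        attempts = max(1, self._config.max_retries + 1)
        for attempt in range(attempts):
            self.attempts_seen += 1
            try:
                return self._service(request)
            except ConnectionError:
                if attempt == attempts - 1:
                    raise  # retries exhausted: surface the failure
        raise AssertionError("unreachable")


class ControlPlane:
    """Holds the cross-cutting policy and distributes it to every sidecar."""

    def __init__(self) -> None:
        self._sidecars: List[Sidecar] = []

    def register(self, sidecar: Sidecar) -> None:
        self._sidecars.append(sidecar)

    def push(self, config: ProxyConfig) -> None:
        for sidecar in self._sidecars:
            sidecar.apply(config)


def make_flaky_service() -> Callable[[str], str]:
    """A service with no retry logic whose first call hits a network error."""
    calls = {"count": 0}

    def service(request: str) -> str:
        calls["count"] += 1
        if calls["count"] == 1:
            raise ConnectionError("transient network failure")
        return f"handled {request}"

    return service


control_plane = ControlPlane()
sidecar = Sidecar(make_flaky_service())
control_plane.register(sidecar)
control_plane.push(ProxyConfig(max_retries=2))  # policy change at runtime
print(sidecar.handle("GET /orders"))
```

The service function contains no retry logic at all; the retry behavior comes entirely from the policy the control plane pushes to the sidecar, which is the point of the abstraction.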

Let’s take a closer look at an example that illustrates a simple flow through a service mesh. We will assume an application made up of three services: A, B and C. A relies on B and C to fulfill certain application functionality.

When a request for service A comes in through the service mesh:

- The service mesh uses dynamic routing rules to determine the intended target service. Should the traffic be routed to the service in our local datacenter or to the copy in our cloud deployment? Which version of that service should fulfill the request? All of these routing rules are configurable at runtime without restarting or redeploying the service.

- Once the target service is determined, an instance is selected from the instance pool using service discovery and a variety of factors drawn from metrics the mesh has observed over recent requests. For example, the instance chosen is likely to be the one expected to return the fastest response.

- The request is sent to the instance, and telemetry such as latency (from which aggregates like the 99th percentile are derived), response code, error code, etc. is published to the metrics server.

- If the instance is unresponsive, fails or is down, the request is automatically retried until its deadline has elapsed. If requests to an instance fail consistently, the service mesh removes that instance from the pool and periodically retries it offline.

- If A needs to call B or C to satisfy the in-flight request, the call is made via the sidecar, which helps reduce network chattiness and hops and provides more reliable service-to-service communication.
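The first four steps above can be sketched in a few lines of Python. This is a simplified illustration, not how any particular mesh is implemented: `Mesh`, `Instance`, `eject_after` and the pool names are hypothetical, instances are plain callables standing in for network calls, and the offline re-check of ejected instances is left out.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Instance:
    name: str
    call: Callable[[str], str]  # stands in for a network call to the instance
    avg_latency: float = 0.0    # seconds, smoothed over recent requests
    consecutive_errors: int = 0
    ejected: bool = False


class Mesh:
    def __init__(self, eject_after: int = 3) -> None:
        self.routes: Dict[str, str] = {}            # service name -> target pool
        self.pools: Dict[str, List[Instance]] = {}  # filled by service discovery
        self.metrics: List[dict] = []               # stand-in for a metrics server
        self.eject_after = eject_after

    def pick(self, service: str) -> Instance:
        pool = self.pools[self.routes[service]]     # step 1: apply routing rule
        healthy = [i for i in pool if not i.ejected]
        if not healthy:
            raise RuntimeError(f"no healthy instance for {service}")
        # Step 2: prefer the instance that has been fastest recently.
        return min(healthy, key=lambda i: i.avg_latency)

    def send(self, service: str, request: str, deadline_s: float = 1.0) -> str:
        deadline = time.monotonic() + deadline_s
        while time.monotonic() < deadline:          # step 4: retry until deadline
            inst = self.pick(service)
            start = time.monotonic()
            try:
                response = inst.call(request)
                ok = True
            except Exception:
                response = ""
                ok = False
            elapsed = time.monotonic() - start
            # Step 3: publish telemetry for this attempt.
            self.metrics.append({"instance": inst.name, "ok": ok, "latency": elapsed})
            inst.avg_latency = (inst.avg_latency + elapsed) / 2
            if ok:
                inst.consecutive_errors = 0
                return response
            inst.consecutive_errors += 1
            if inst.consecutive_errors >= self.eject_after:
                inst.ejected = True                 # remove from the pool
        raise TimeoutError(f"deadline exceeded for {service}")


def failing(request: str) -> str:
    raise ConnectionError("instance down")


def healthy(request: str) -> str:
    return f"a2:{request}"


mesh = Mesh(eject_after=3)
mesh.pools["A-v1"] = [Instance("a1", failing), Instance("a2", healthy, avg_latency=0.5)]
mesh.routes["A"] = "A-v1"
print(mesh.send("A", "ping"))
```

Changing `mesh.routes["A"]` to point at a different pool while the program is running mirrors the idea that routing rules can change at runtime without redeploying the service.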

Several projects, many of them hosted by the Cloud Native Computing Foundation (CNCF), aim to address this need. Popular options include Istio, Linkerd, Kuma, Consul and Open Service Mesh; Zuul, which is strictly an API gateway rather than a mesh, is often mentioned alongside them. Over the next couple of posts, we will explore some of these implementations, how to apply them to an existing application, and their pros and cons.
